
We have said it before, and we will say it again as everyone is chewing on the financial results that Nvidia just turned in for its third quarter of fiscal 2025 ended in October. Nvidia does not have to be in any hurry to deliver its “Blackwell” GPU platforms, which are rumored to be having a new set of delays, because companies will still be eager to pay for platforms based on the prior generation of “Hopper” GPUs. It takes a little more than twice as many Hopper GPUs to do the work of a Blackwell GPU, but the Blackwell GPU only drives around 1.7X more revenue and is, by virtue of its advanced manufacturing, probably more costly to create.
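The per-unit-of-work economics in that paragraph can be made concrete with a little arithmetic. This is a back-of-the-envelope sketch, not Nvidia's actual pricing. It assumes that "a little more than twice" means 2.1 Hopper GPUs per Blackwell GPU, takes the roughly 1.7X revenue multiple from the text, and normalizes Hopper revenue per GPU to 1.0. The function name is our own, for illustration only.

```python
def hopper_to_blackwell_revenue(hopper_gpus_per_blackwell: float,
                                blackwell_revenue_multiple: float) -> float:
    """Hopper revenue divided by Blackwell revenue for the same amount of work.

    Hopper revenue per GPU is normalized to 1.0, so one Blackwell GPU brings in
    blackwell_revenue_multiple, while doing the same work on Hopper takes
    hopper_gpus_per_blackwell GPUs.
    """
    hopper_revenue = hopper_gpus_per_blackwell * 1.0
    blackwell_revenue = blackwell_revenue_multiple * 1.0
    return hopper_revenue / blackwell_revenue


# Assumption: "a little more than twice" is modeled as 2.1 Hopper GPUs.
ratio = hopper_to_blackwell_revenue(2.1, 1.7)
print(f"Hopper brings in {ratio:.2f}X the revenue for the same work")
```

Under these assumptions, the script prints 1.24X, meaning Hopper brings in roughly 24 percent more revenue for the same delivered work, before any difference in manufacturing cost is considered.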

In other words, Nvidia can rake in a lot more money selling H100 and H200 GPUs than it can pushing B100 and B200 devices. So if there was a delay because of a flaw in the Blackwell masks (since fixed, at the cost of a few weeks on the ramp), and if there is another delay now because some components are supposedly overheating, so what? The problems will be fixed, and in the meantime Nvidia co-founder and chief executive officer Jensen Huang can drag dump truck loads of money to Silicon Valley Bank each and every minute for the foreseeable future and deposit them into the Big Green checking account.

And that is, metaphorically speaking, what Nvidia did in Q3 F2025.

In the quarter, Nvidia had total sales of $35.08 billion, up 93.6 percent from the year-ago quarter, with operating income up 89.2 percent to $21.87 billion and net income up 108.9 percent to $19.31 billion. Despite ongoing investments in research and development, share buybacks, and higher general costs, Nvidia still ended the quarter with $38.49 billion in cash in the bank. That cash hoard is up $3.69 billion from the prior quarter and has more than doubled since this time last year.
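Reported growth rates can be turned back into the year-ago figures they imply, which is a handy sanity check when reading results like these. What follows is a minimal sketch using the operating income and net income figures and growth rates given above. The helper name `implied_prior` is our own.

```python
def implied_prior(current: float, pct_change: float) -> float:
    """Back out the prior-period value from a current value and a percent change.

    A 93.6 percent increase means current = prior * 1.936, and a
    14.7 percent decline (pct_change=-14.7) means current = prior * 0.853.
    """
    return current / (1.0 + pct_change / 100.0)


# Q3 F2025 figures in billions of dollars, with year-on-year growth in percent.
reported = {
    "operating income": (21.87, 89.2),
    "net income": (19.31, 108.9),
}

for name, (current, growth) in reported.items():
    print(f"{name}: ${implied_prior(current, growth):.2f}B a year ago")
```

This works out to about $11.56 billion in operating income and $9.24 billion in net income a year earlier. The same helper handles declines, such as a sequential drop, if you pass a negative percentage.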

Nvidia has financial breathing room like it has never had in its corporate life.

In the October quarter, Nvidia’s gaming card revenues – remember when Nvidia was a gaming company with a tiny datacenter business? – were $4.05 billion, up 16.4 percent. The vast majority of its revenues, in this case $31.04 billion, up 2.1X year on year and up 17.4 percent sequentially, came from its Compute & Networking group.

Not all compute is in the datacenter at Nvidia, even though most of it is, and sometimes gaming devices are used in datacenter products. So Compute & Networking group revenues are always a little different from Datacenter division revenues.

During Q3 F2025, Nvidia’s datacenter revenues were $30.77 billion, up 2.1X year on year and up 17.1 percent sequentially. Based on statements that Colette Kress, Nvidia’s chief financial officer, made on a call going over the numbers with Wall Street analysts, we reckon that around 49.5 percent of the datacenter revenues came from sales to “cloud service providers,” which works out to $15.23 billion. This is up by a factor of 2X compared to a year ago and is driven by the clouds installing clusters that span tens of thousands of GPUs, on which renters can buy timeslices. Everyone else, including pure hyperscalers such as Meta Platforms as well as enterprise, government, and academic customers, accounted for the remaining $15.54 billion in revenues. Both halves of the datacenter business roughly doubled or better, and the clouds grew faster sequentially even though they grew more slowly year on year.
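The cloud versus non-cloud split above is simple proportional arithmetic. This sketch reproduces it. Note that the 49.5 percent cloud share is our estimate drawn from Kress's remarks, not a figure Nvidia reported.

```python
# Q3 F2025 datacenter revenue in billions of dollars (reported).
DATACENTER_REVENUE = 30.77
# Estimated share going to cloud service providers (our estimate, not reported).
CSP_SHARE = 0.495

csp = DATACENTER_REVENUE * CSP_SHARE   # cloud service providers
other = DATACENTER_REVENUE - csp       # hyperscalers, enterprise, government, academia

print(f"CSP: ${csp:.2f}B, other: ${other:.2f}B")
```

This prints `CSP: $15.23B, other: $15.54B`, which matches the figures in the text.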

Thankfully, Nvidia has been breaking out datacenter compute from datacenter networking sales in recent quarters, so now we know and we do not have to guesstimate as we have had to do for years.

As the chart below shows, the compute part of the datacenter pie still dominates, which reflects how the investment in HPC/AI supercomputers is divided between compute and networking. In the quarter, compute drove $27.64 billion in revenues, up 2.2X year on year and up 22.3 percent sequentially. Networking was up 51.8 percent year on year to $3.13 billion, but fell 14.7 percent sequentially. Our model shows InfiniBand sales were up only 15.2 percent year on year and actually fell by 27.3 percent sequentially, to $1.87 billion. Ethernet networking nearly tripled in the quarter, and Spectrum-X for AI sales more than tripled. Ethernet sales are driven mostly by some big Spectrum-X clusters, like the one xAI installed in its Memphis datacenter to lash together 100,000 H100 GPUs. We think that works out to $1.26 billion in sales for all Ethernet products in Q3 F2025.

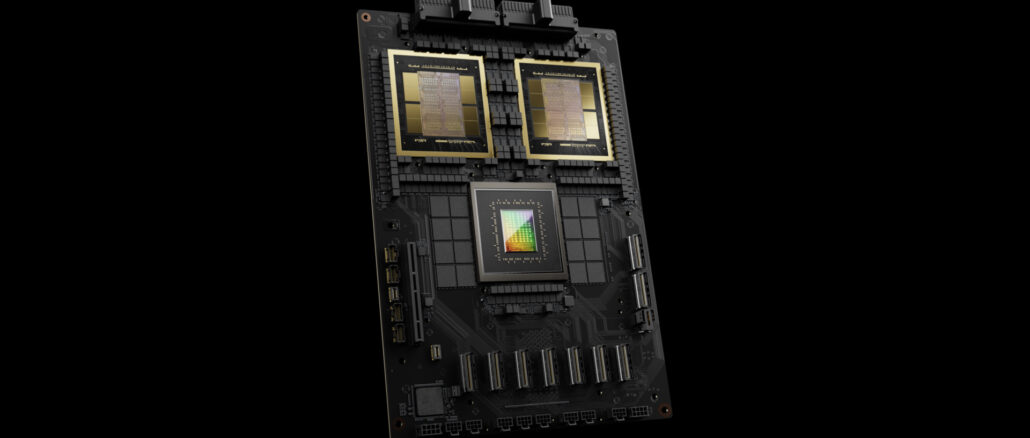
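Segment breakouts like these should reconcile. Compute plus networking ought to equal datacenter revenue, and InfiniBand plus Ethernet ought to equal networking. The small check below tests this. It allows a tolerance of $0.005 billion to absorb rounding, since all the figures are given to two decimals. The InfiniBand and Ethernet numbers come from our model, not from Nvidia, and the `reconciles` helper is our own.

```python
# Q3 F2025 figures in billions of dollars. The compute/networking split is
# reported; the InfiniBand/Ethernet split is our model's estimate.
datacenter = {"compute": 27.64, "networking": 3.13}
networking = {"infiniband": 1.87, "ethernet": 1.26}


def reconciles(parts: dict[str, float], total: float, tol: float = 0.005) -> bool:
    """Return True if the parts sum to the total within a rounding tolerance."""
    return abs(sum(parts.values()) - total) <= tol


print(reconciles(datacenter, 30.77))                      # True
print(reconciles(networking, datacenter["networking"]))   # True

# Share of networking revenue by fabric.
shares = {k: v / datacenter["networking"] for k, v in networking.items()}
print({k: f"{s:.1%}" for k, s in shares.items()})
```

By this estimate, InfiniBand is still a bit under 60 percent of networking revenue, with Ethernet making up the rest.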

Kress gave a little color on datacenter sales in the quarter, saying that the H200 accelerator, which drove “double digit billions” in sales in Q3, was the fastest ramping product in the company’s history. Amazon Web Services, Microsoft Azure, and CoreWeave have all launched instances based on the H200. She also said that the engineering changes to the Blackwell masks were done, but said nothing at all about the rumored heating issues with Blackwell (which leads us to believe they are unsourced hogwash aimed at manipulating the Nvidia stock price). Kress added that Blackwell is in full production and that over 13,000 Blackwell GPU samples shipped to partners in the quarter. Microsoft Azure will be the first out the door with instances based on the GB200 hybrid Grace CPU-Blackwell GPU platform.

“Blackwell demand is staggering and we are racing to meet the demands that customers are placing on us,” she said on the call.

Jensen Huang, Nvidia co-founder and chief executive officer, put some color on this enormous demand. In addition to the “normal” scaling up of performance by adding GPUs to process trillions of tokens across trillions of parameters, new foundation models are also doing more processing on their GPUs during post-training, using reinforcement learning with AI feedback and synthetic data. On top of this, model builders are creating “chain of thought” models. These mimic the deep, and sometimes serendipitous and random, thinking we do when engaged in concentrated or creative work, an approach known as inference-time scaling. The net result, Huang said, is that the state of the art foundation models built earlier this year on Hopper GPUs topped out at 100,000 devices. The next generation models, by contrast, are starting out with 100,000 Blackwell GPUs and will presumably scale up from there.

Nvidia shipped “billions” of dollars of Blackwell platforms in Q3 and will drive an increasing amount of revenue per quarter from the product line in subsequent quarters into fiscal 2026. (The speculation is that the crossover, when Nvidia makes more money from Blackwell than from Hopper, might happen in April or May.)

“I think we are in great shape with the Blackwell roadmap,” Huang said when poked about it on the call given the mask issue and the rumors. “Everything is on track as far as I know.”

And given how Huang runs Nvidia, he knows and he tells.
